Chart Metric Value Choices (Y Axis)

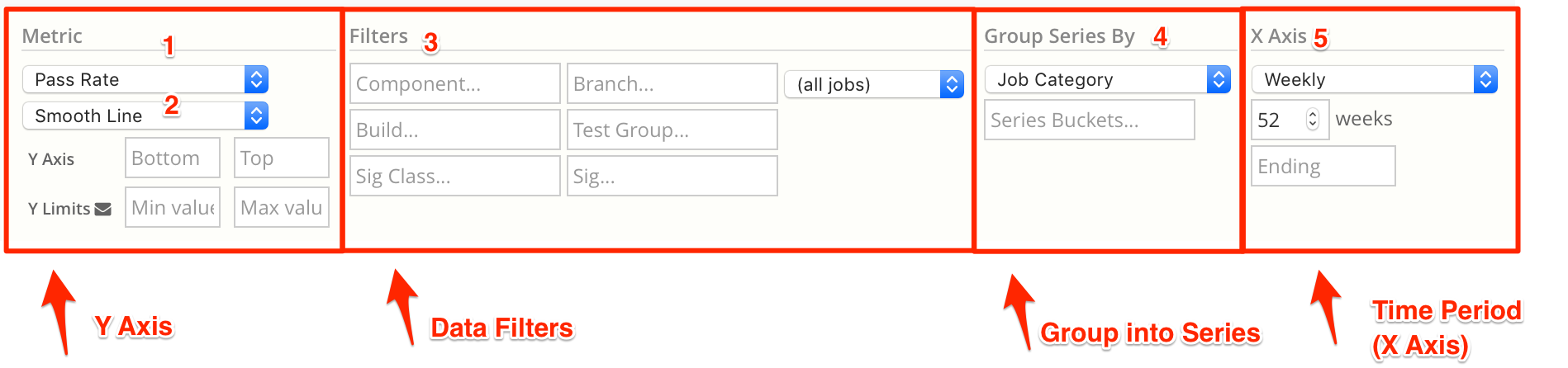

Chart Configuration overview

→ see the Y Axis box in the image above.

Simscope Charts have a variety of built-in job and regression metrics to choose from. These are grouped below.

Job Counts

| Metric | Unit | Description |
|---|---|---|
| Pass Rate | % | Average job pass rate. Calculation: #Pass/#Jobs * 100 |
| #Fail | # | Total number of failing jobs |
| #Pass | # | Total number of passing jobs |
| #Jobs | # | Total number of jobs |
| #Signatures | # | Total number of unique signatures |
| Jobs/Fail | jobs | How many jobs to run until the next fail? Inverse of the fail rate. Calculation: #Jobs/#Fail |
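The two calculations in this table can be reproduced by hand. The following is a minimal sketch, assuming you already have the raw job counts; the function name `job_count_metrics` and the choice to return `None` when a ratio is undefined are illustrative assumptions, not Simscope behavior.

```python
def job_count_metrics(num_jobs, num_pass, num_fail):
    """Compute Pass Rate (%) and Jobs/Fail from raw job counts.

    Hypothetical helper for illustration; not part of Simscope.
    """
    # Pass Rate: #Pass/#Jobs * 100 (undefined when there are no jobs).
    pass_rate = num_pass * 100 / num_jobs if num_jobs else None
    # Jobs/Fail: #Jobs/#Fail (undefined when nothing failed).
    jobs_per_fail = num_jobs / num_fail if num_fail else None
    return {"pass_rate": pass_rate, "jobs_per_fail": jobs_per_fail}


# Made-up counts: 1000 jobs, 950 passing, 50 failing.
metrics = job_count_metrics(num_jobs=1000, num_pass=950, num_fail=50)
print(metrics)
```

With these made-up counts, one job in every twenty fails, so Jobs/Fail is 20 while Pass Rate is 95%.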

Cycles

| Metric | Unit | Description |
|---|---|---|
| CPS (Pass only) | Hz | Cycles-Per-Second, among passing jobs. Calculation: #Cycles/#Pass |
| CPS (Pass + Fail) | Hz | Cycles-Per-Second, among all jobs. Calculation: #Cycles/#Jobs |
| #Cycles | cycles | Total cycles |
| Cycles/Job | cycles | Average cycles per job |

Note: 1 Hz = 1 cycle per second

Compute Time

| Metric | Unit | Description |
|---|---|---|
| Compute Weeks | weeks | Total compute time (in compute-weeks) |
| Compute Days | days | Total compute time (in compute-days) |
| Compute/Job | minutes | Average compute time per job |
| Compute/Pass | minutes | Average compute time per passing job |

Compute day and week

What is a "compute-day"?

- A compute-day is the amount of compute time for a single machine across one day (24 hours).
  - Equivalent to: `cpu_time_seconds / (60 * 60 * 24)`
  - This is useful to show how much compute time a regression takes.
  - Compute days are useful for rendering daily compute charts.

  For example, if a regression uses `450.8` compute-days, this means if you ran all the jobs serially on a single machine, it would take 451 days to complete the regression.

- A compute-week is the amount of compute time for a single machine across one week.
  - Equivalent to: `cpu_time_seconds / (60 * 60 * 24 * 7)`
  - One `compute-week` is equivalent to `(compute-day) * 7`
  - Compute weeks are useful for rendering weekly compute charts.
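The two conversions above can be sketched in a few lines. This is an illustrative example only: the helper names and the per-job CPU times are made up, and it assumes you have each job's CPU time in seconds.

```python
SECONDS_PER_DAY = 60 * 60 * 24
SECONDS_PER_WEEK = SECONDS_PER_DAY * 7


def compute_days(cpu_time_seconds):
    """Convert CPU seconds to compute-days."""
    return cpu_time_seconds / SECONDS_PER_DAY


def compute_weeks(cpu_time_seconds):
    """Convert CPU seconds to compute-weeks."""
    return cpu_time_seconds / SECONDS_PER_WEEK


# Hypothetical regression: CPU time of each job, in seconds.
job_cpu_seconds = [3600, 7200, 86400, 172800]
total = sum(job_cpu_seconds)

print(f"{compute_days(total):.3f} compute-days")
print(f"{compute_weeks(total):.3f} compute-weeks")
```

Summing the per-job CPU time before converting matches the "run all the jobs serially on a single machine" interpretation of a compute-day.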

Regression Data

| Metric | Unit | Description |
|---|---|---|
| # of Jobs/Regression | # | Average number of jobs per regression |
| # of Sigs/Regression | # | Average number of unique signatures per regression |
| # of Cycles/Regression | cycles | Average number of cycles simulated per regression |
| # of Regressions Containing | # | Number of regressions containing a configuration |
| Compute/Regression | days | Total compute time per regression |
| Regression Duration | hours | Wallclock duration of a regression. Calculation: regr_finish_time - regr_start_time |
| Model Age | hours | Average age of regression model (see details below) |

Regression Metric Value

Regression Metric Value is used to generate charts based on custom regression metadata.

For example, if you store coverage values in regressions, this metric can be used to generate coverage charts.

Model Age

Model Age is calculated as:

model_age = regression_start_time - model_checkin_time

The result is in hours.

- This shows how old the model was at the time the regression started simulating it.

Ideally, units should be running fresh models, not old models.

For example, if regression daily/127 starts at 2020-02-05T20:57:35Z and is simulating the model abcd, which was checked in at 2020-02-05T15:50:20Z, this has a model_age of 5.1 hours:

model_age = (2020-02-05T20:57:35Z - 2020-02-05T15:50:20Z) = 5.1 hours

NOTE: if `model_age` is a negative value, this is a BUG in your regression data. Model Age should always be a positive number.
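The Model Age calculation can be checked with a short script. This is a sketch under the assumption that both timestamps are UTC strings in the `YYYY-MM-DDTHH:MM:SSZ` form used in the example; the function names are hypothetical and not part of Simscope.

```python
from datetime import datetime, timezone


def parse_utc(timestamp):
    """Parse a 'YYYY-MM-DDTHH:MM:SSZ' timestamp as a UTC datetime."""
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ")
    return parsed.replace(tzinfo=timezone.utc)


def model_age_hours(regression_start_time, model_checkin_time):
    """model_age = regression_start_time - model_checkin_time, in hours."""
    delta = parse_utc(regression_start_time) - parse_utc(model_checkin_time)
    return delta.total_seconds() / 3600


age = model_age_hours("2020-02-05T20:57:35Z", "2020-02-05T15:50:20Z")
print(f"model_age = {age:.1f} hours")

# A negative value means the model was checked in after the regression
# started, which points to bad regression data.
if age < 0:
    print("negative model_age: check the regression data")
```

The difference between the two timestamps is 5 hours, 7 minutes, and 15 seconds, which rounds to the 5.1 hours quoted in the example.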

Quality/Health Metrics

These metrics can be used to chart overall quality and health.

| Metric | Unit | Description |
|---|---|---|
| Compute until next fail | hours | How many compute hours are needed to generate the next job fail? Higher number means higher quality design. Calculation: (compute / #Fail) |
| Compute/Signature | hours | How many compute hours are needed to generate the next unique signature? Higher number means higher quality design. Calculation: (compute / #Signatures) |
| Average cycle# at pass | cycle | Average cycle a passing job finishes at. |
| Average cycle# at fail | cycle | Average cycle a failing job fails at. |
| Cycles until next fail | cycles | Number of cycles to simulate until the next fail. Calculation: #Cycles/#Fail |
| Cycles until next signature | cycles | Number of cycles to simulate until the next unique signature. Calculation: #Cycles/#Signatures |
| #Fail/Signature | # | Average number of fails within each signature |
| Median Fail/Signature | # | Median number of fails within each signature |
| Fail/Compute Week | # | If you ran a week's worth of compute time against this configuration, how many fails are expected? |
| Fail/Million Jobs | # | If you ran a million jobs against this configuration, how many fails are expected? |
| Fail/Billion Cycles | # | If you ran a billion cycles against this configuration, how many fails are expected? |
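Several of these metrics are simple ratios of totals. The sketch below is illustrative only: the function name and input values are made up, and the formulas for Fail/Million Jobs and Fail/Billion Cycles are assumptions (a fail rate scaled to one million jobs or one billion cycles), since the table does not state their calculation.

```python
def quality_metrics(compute_hours, num_jobs, num_fail, num_signatures, num_cycles):
    """Compute a few quality/health ratios from regression totals.

    Hypothetical helper for illustration; not part of Simscope.
    """

    def ratio(numerator, denominator):
        # Undefined ratios (division by zero) are reported as None.
        return numerator / denominator if denominator else None

    return {
        # From the table: compute / #Fail
        "compute_until_next_fail": ratio(compute_hours, num_fail),
        # From the table: compute / #Signatures
        "compute_per_signature": ratio(compute_hours, num_signatures),
        # From the table: #Cycles / #Signatures
        "cycles_until_next_signature": ratio(num_cycles, num_signatures),
        # Average number of fails within each signature
        "fails_per_signature": ratio(num_fail, num_signatures),
        # Assumption: fail rate per job, scaled to one million jobs
        "fail_per_million_jobs": ratio(num_fail * 1_000_000, num_jobs),
        # Assumption: fail rate per cycle, scaled to one billion cycles
        "fail_per_billion_cycles": ratio(num_fail * 1_000_000_000, num_cycles),
    }


# Made-up totals for one configuration.
q = quality_metrics(
    compute_hours=1200,
    num_jobs=50_000,
    num_fail=40,
    num_signatures=8,
    num_cycles=2_000_000_000,
)
for name, value in q.items():
    print(f"{name}: {value}")
```

Scaling to a fixed number of jobs or cycles makes configurations of different sizes directly comparable on the same chart.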